Fifteen months later I’m back writing again and sharing my grading journey. If you are curious about the whole evolution, take a look at my earlier posts with this tag.

First, I’m sure you can imagine why I haven’t written in a while, but I finally feel that I’ve moved past the overwhelming sense of burnout from this past year and am ready to continue my quest of revolutionizing the college math classroom. To document my progress and the changes I’ve made, I want to share how I handled assessment over the last year.

To provide some context, all of the classes in which I used mastery grading last year were in a hybrid format (some students in classroom, some joining via Zoom) and naturally, the format of the class impacted the way I handled assessments as well. I continued to use and modify the grading system in the same Math and Politics class described in the previous posts and I also incorporated the system in a Statistics course which I taught twice last year. Starting from Spring 2020 (described in the last post), here’s an overview of the main features of my grading systems over the last year which I will follow with more thoughts.

Spring 2020: Math and Politics – 80 total objectives (31 procedural, 34 conceptual, 15 assignments/class)

Summer/Fall 2020: Math and Politics – 25 total objectives (20 content, 5 assignments/class)

January Term 2021: Statistical Data Analysis – 50 “Mastery Points” (34 from content, 16 from assignments/project components/class)

Spring 2021: Statistical Data Analysis – small content rearrangement but same overall structure as above

Math and Politics – 50 “Mastery Points” (40 from content, 10 from assignments/class)

You’ll notice a couple of big changes here. The first relates to the grain size of my objectives; ultimately there were too many of them and they were too clunky to deal with. I didn’t change the content of the course at all; I simply consolidated the objectives into broader categories and tested them on individual quizzes rather than collective exams. The second is the notion of “Mastery Points,” which refers to my shift from a binary system (you either did or did not meet the objective) to a three-tier system of “Mastery/Intermediate/Beginner,” corresponding to 2, 1, or 0 “Mastery Points,” respectively.
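For anyone who wants to see the arithmetic, here is a minimal sketch of how the three tiers add up to a “Mastery Points” total. It assumes the Math and Politics structure listed above (20 content categories worth up to 2 points each, plus up to 10 points from assignments/class). The names `Tier`, `mastery_points`, and the sample student are hypothetical and made up for illustration; this is not the actual spreadsheet I used.

```python
from enum import IntEnum


class Tier(IntEnum):
    """Three-tier scale; the integer value is the number of Mastery Points."""
    BEGINNER = 0
    INTERMEDIATE = 1
    MASTERY = 2


def mastery_points(content_tiers, other_points=0):
    """Add up points from content quiz tiers plus assignments/class points."""
    return sum(int(tier) for tier in content_tiers.values()) + other_points


# Hypothetical student: Mastery on 18 of 20 categories,
# Intermediate on one and Beginner on another.
content = {f"category_{i}": Tier.MASTERY for i in range(1, 21)}
content["category_3"] = Tier.INTERMEDIATE
content["category_7"] = Tier.BEGINNER

total = mastery_points(content, other_points=8)
print(f"{total} out of 50 Mastery Points")
```

Because every component lands on the same point scale, a retake that moves a category from Intermediate to Mastery simply adds one point to the total.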

Consolidating Objectives

For my Math and Politics class, I consolidated the longer list of 65 content objectives into 20 content categories, which was easy to do given my familiarity with the course material. For Statistics, I used this approach from the start and ended up with 17 content categories. The process was a bit different because the course content is more cumulative, so some of the categories were really about demonstrating a conceptual understanding of a wider range of prior content. That kind of understanding can get lost when every topic is placed (and assessed) in isolation. In my opinion, breaking down a semester-long course into 15-25 content categories or objectives is the way to go, as it will feel (much) more manageable, and it is perfectly fine if an objective or category encompasses multiple related ideas or sub-topics. Click below to see my categories and descriptions for each course.

Content Quizzes

Admittedly, my approach here was shaped by the move to online teaching. Starting in Summer 2020, in every course I had Moodle (our LMS) quizzes for each of the content categories as my main form of content assessment. For each of the categories, I had a pair of corresponding Moodle quizzes – one labeled “Practice” and one labeled “Mastery.”

The Practice quiz would be available immediately after covering the material in class and contained problems similar to the ones students would be expected to see on the Mastery quiz. The practice questions were a mix of multiple choice, drag and drop definitions, fill in the blank, and numerical entry, and with all of these questions students could check the correctness of their answers in the system immediately. I intentionally set up the questions so that if a student’s answer was incorrect, the system would not show the correct answer, as I encouraged students to make use of office hours and work together when they were stuck on a problem. These quizzes were optional in the sense that they didn’t “count” towards demonstrating understanding, but they were of course highly recommended as the place to practice without being graded or penalized for mistakes, aka learning.

The Mastery quiz was scheduled ahead of time. Once students opened it, the quiz ran on a timer (the length varied between 30 and 60 minutes) and all questions appeared as one “essay question.” In other words, there were no multiple choice or simple numerical entry questions; students had to type, or write and scan, all of their work and reasoning to demonstrate understanding of the content. In my Math and Politics class, these quizzes happened during class time. In Statistics, they happened outside of class time (generally available for a day or two) due to the amount of content covered and the class time needed. In both structures, students were allowed and encouraged to use their notes. With that being said, I’m not going to pretend that academic dishonesty wasn’t an issue, because in both formats it certainly was. This concern warrants its own post, but for now I’ll just say that it existed, as it does with most classes and types of assessments.

I can report back that students enjoyed this quiz format. It allowed them to grow from the Practice quizzes and ask questions during office hours, and the smaller chunks of content on each quiz (as opposed to a traditional midterm) took some anxiety and pressure away from the assessments, as did the existence of tokens allowing them to retake quizzes and improve their scores. On that note, I’ll add that students had five digital tokens that could be used to retake quizzes or submit assignments late, and there were a couple of instances in which students could earn an additional token through exceptional work and effort.

Two Tiers to Three Tiers

Why? In my binary system, I noticed many instances of a tricky middle ground and I wanted space to acknowledge growth and progress among students whose understanding was not quite there yet. I will add that reading and listening to experiences of other professors also helped – here’s a shoutout to the Mastery Grading community.

In practice, I still experienced instances of a tricky middle ground, now between a 1 (“making progress”) and a 2 (“mastery”), and some student feedback revealed that they thought it was too difficult to get a 2. To me, this highlights the importance of adding more metacognitive work, because I don’t think it’s a case of me being “too tough” or setting “too high a bar” but rather of students not necessarily knowing what they don’t know.

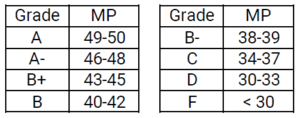

Using the tiers for quizzes along with different categories (project, assignments, class engagement, etc.) allowed me to put everything on the same scale of “Mastery Points.” I personally like this because I think it is easier for students to track and it makes it simple to put together a translation to a final grade (see images below for the final grade translation and what the shared Google sheet looked like in Statistics). It also allows you to think about the relative weight of a project or other assignments in the overall scheme (yes, I know this feels closer to traditional grading, but keep in mind the goal is still working towards objectives) and gives you the flexibility to grade other components using a rubric or whatever metric you prefer.

See bit.ly/MasteryTemplate for my Google Sheets templates and instructions.


What’s Next?

First and foremost – the name. The community is moving away from the “mastery” term for good reason – language matters and it’s best to disassociate from anything that brings up the notion of slavery and if you resist that move you should probably ask yourself why. With that being said, I don’t exactly have a replacement term yet; “standards-based” sounds dry to me to be honest.

I will also be implementing this system in other courses, including undergraduate probability (math major course) which I will be teaching for the first time this Fall. I’ve always held that this system is best when teaching a course that you’ve taught before because you are more familiar with the content objectives but I am confident enough in my abilities at this point to extract appropriate objectives to set up and then execute the system.

Ungrading. This is a big shift from standards-based grading that I will also be implementing this semester in my redistricting course. The motivation: there is a small but growing movement in higher education to remove grades (other than the final letter grade) altogether in order to address the existing power dynamic of grading, increase students’ metacognition and accountability, and hopefully move them away from the “path of least resistance” (“What’s the least I can do to get an A?”). The idea: during the first week of the semester, students will co-construct what constitutes an A, B, C, and D, and they will grade themselves at the end of the semester. I am not planning to change my existing assignments and projects, but I will build in more regular opportunities for reflection and metacognition so that they can track their own learning and progress. I do have to reserve the right to change a student’s final grade if I do not agree with their self-assigned grade, and this can go in both directions, as research shows that female students are more prone to undervalue themselves and their work in self-assessment.

I will update my progress on both fronts in my continued journey through assessment. And I don’t plan to lose sight of the bigger picture – for me, re-envisioning the mathematical experience for students means creating humanized spaces in the three key areas of (a) content, (b) pedagogy, and (c) assessment. Let’s do this.